Optimizing AI Model Performance and Cost-Efficiency on AWS

Building scalable AI workflows for high-performance predictions

Project background

Overview

Our client, a data-driven company specializing in predictive analytics, relied on AI models to deliver real-time insights. However, their existing models suffered from high latency, heavy compute usage, and inconsistent accuracy. The challenge was to build more efficient models that could process large volumes of data faster while remaining scalable and cost-effective. This required changes at both the model-architecture level and in the deployment strategy to balance speed, accuracy, and infrastructure efficiency.

Project goals

- Develop AI models with optimized architectures to improve inference speed and accuracy.

- Reduce the computational cost of model training and deployment by at least 40%.

- Implement an adaptive learning system that retrains models only when necessary.

- Build scalable AI operations that balance high availability with cost efficiency.

- Web app

- 4 team members

- 900+ hours spent

- AI & Analytics domain

Challenges

- Existing AI models had high latency, making real-time predictions impractical.

- Inefficient feature selection led to slow processing and unnecessary computational overhead.

- Frequent retraining cycles consumed excessive cloud resources, driving up operational costs.

- Deployment inefficiencies resulted in suboptimal resource allocation for inference workloads.

Our approach

Solution

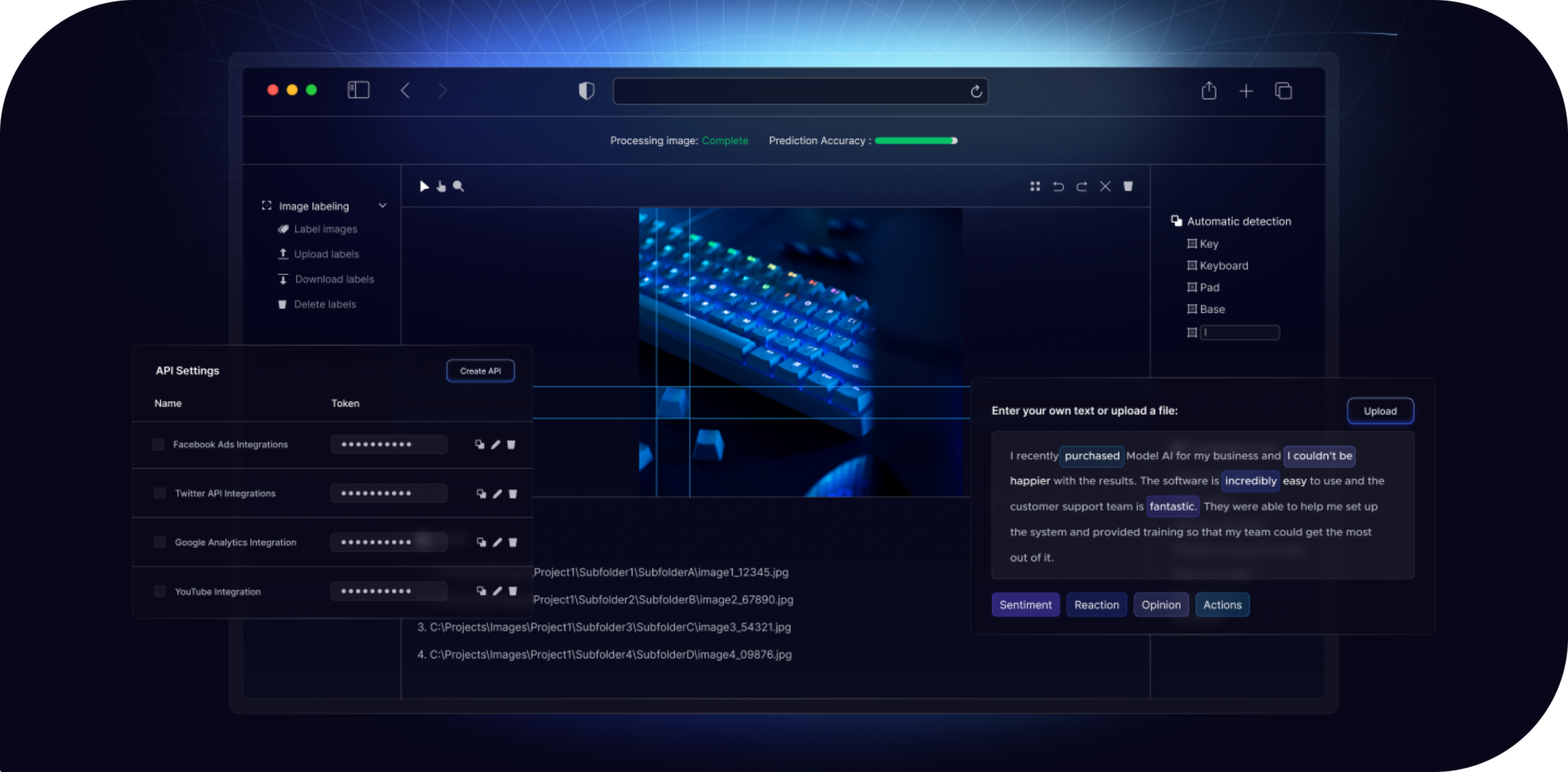

To tackle these challenges, we redesigned the AI model architecture, incorporating transformer-based networks and optimized convolutional layers. By applying pruning and quantization techniques, we significantly reduced model size and improved inference performance without compromising accuracy. The introduction of automated feature selection helped streamline data processing, ensuring that only the most relevant inputs were retained.
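The pruning and quantization step can be illustrated with a minimal, framework-free sketch. It assumes unstructured magnitude pruning and symmetric per-tensor int8 post-training quantization; the case study does not say which pruning or quantization schemes were used, and the function names below are hypothetical. In a real PyTorch or TensorFlow pipeline, built-in utilities (for example `torch.nn.utils.prune` or TensorFlow Lite post-training quantization) would do this work on whole model tensors.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Unstructured magnitude pruning: zero the `sparsity` fraction of smallest-|w| weights."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # how many weights to zero out
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.9, -0.05, 0.3, -0.6, 0.01, 0.2, -0.02, 0.75]
    pruned = magnitude_prune(w, sparsity=0.5)
    print(pruned)  # [0.9, 0.0, 0.3, -0.6, 0.0, 0.0, 0.0, 0.75]
    q, scale = quantize_int8(pruned)
    print(q)  # [127, 0, 42, -85, 0, 0, 0, 106]
```

Zeroed weights only save memory and compute once the model is stored or executed in a sparse-aware format, while storing int8 codes plus one float scale cuts weight storage roughly 4x compared with float32. Accuracy should be re-validated after both steps.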

For model training, we used distributed learning with TensorFlow and PyTorch, with Horovod distributing computation across multiple GPUs. Instead of retraining models at fixed intervals, we introduced an adaptive learning mechanism that triggered retraining only when meaningful shifts in data patterns were detected. This cut unnecessary training cycles and made better use of compute resources.
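The adaptive retraining trigger could look something like the sketch below. The case study does not specify how "meaningful shifts" were measured, so this assumes a Population Stability Index (PSI) check on a single numeric feature, with equal-width bins taken from the reference (training) data and a threshold of 0.2, a common rule of thumb rather than a value from the project. All names are hypothetical.

```python
import math
from typing import List, Sequence


def _bin_fractions(values: Sequence[float], lo: float, hi: float, bins: int) -> List[float]:
    """Fraction of `values` in each of `bins` equal-width bins over [lo, hi]."""
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / width)
        idx = min(max(idx, 0), bins - 1)  # out-of-range values go to the edge bins
        counts[idx] += 1
    return [c / len(values) for c in counts]


def population_stability_index(reference: Sequence[float], current: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (cur - ref) * ln(cur / ref); 0 means identical histograms."""
    lo, hi = min(reference), max(reference)
    ref = _bin_fractions(reference, lo, hi, bins)
    cur = _bin_fractions(current, lo, hi, bins)
    # eps keeps the log finite when a bin is empty in one of the samples.
    return sum((c - r) * math.log((c + eps) / (r + eps)) for r, c in zip(ref, cur))


def should_retrain(reference: Sequence[float], current: Sequence[float],
                   threshold: float = 0.2) -> bool:
    """Trigger retraining only when drift exceeds the (assumed) PSI threshold."""
    return population_stability_index(reference, current) > threshold


if __name__ == "__main__":
    train_feature = [i / 100 for i in range(100)]
    live_feature = [0.8 + i / 1000 for i in range(100)]  # mass shifted to the top of the range
    print(should_retrain(train_feature, train_feature))  # False
    print(should_retrain(train_feature, live_feature))   # True
```

In practice such a check would run per feature on a schedule, and a distributed training job would be launched only when it reports drift, which is what keeps idle retraining cycles off the GPU bill.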

On the deployment side, we implemented dynamic batching strategies to maximize GPU utilization, ensuring that inference requests were processed efficiently. By hosting multiple AI models on shared SageMaker Multi-Model Endpoints, we minimized infrastructure costs while maintaining high availability. Real-time performance monitoring was integrated with CloudWatch and a custom AI tracking framework, enabling proactive detection of model drift and performance degradation.
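Dynamic batching trades a small, bounded wait for larger GPU batches. The sketch below is a simplified offline simulation of that policy, assuming two hypothetical knobs, `max_batch_size` and `max_wait_ms`: a batch closes as soon as it is full, or when its wait window (measured from its first request) expires, and any request arriving after the window starts a new batch. A production server would flush on a timer from a background thread instead; serving frameworks such as NVIDIA Triton expose comparable settings.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    request_id: int
    arrival_ms: float


def form_batches(requests: List[Request], max_batch_size: int,
                 max_wait_ms: float) -> List[List[int]]:
    """Group requests into batches of request ids using a size-or-timeout policy."""
    batches: List[List[int]] = []
    current: List[Request] = []
    batch_start = 0.0
    for req in sorted(requests, key=lambda r: r.arrival_ms):
        # The open batch's wait window expired before this request arrived: dispatch it.
        if current and req.arrival_ms - batch_start > max_wait_ms:
            batches.append([r.request_id for r in current])
            current = []
        if not current:
            batch_start = req.arrival_ms  # the window starts with the first request
        current.append(req)
        # A full batch is dispatched immediately, without waiting.
        if len(current) == max_batch_size:
            batches.append([r.request_id for r in current])
            current = []
    if current:
        batches.append([r.request_id for r in current])
    return batches


if __name__ == "__main__":
    arrivals = [0, 1, 2, 3, 20, 21, 50]
    reqs = [Request(i, float(t)) for i, t in enumerate(arrivals)]
    print(form_batches(reqs, max_batch_size=3, max_wait_ms=5))
    # [[0, 1, 2], [3], [4, 5], [6]]
```

Raising `max_wait_ms` yields fuller batches and better GPU utilization at the cost of tail latency, so the two knobs are usually tuned against the latency target of the endpoint.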

Team

The project was carried out by a four-person team: an AI researcher, a machine learning engineer, a cloud architect, and a DevOps specialist. Their combined expertise allowed us to deliver a well-optimized solution that met the client’s needs.

Results

The project delivered a 3x improvement in inference speed, significantly enhancing real-time prediction capabilities. Predictive accuracy increased by 12%. Distributed learning techniques reduced training time by 40%, optimizing computational efficiency.

Automated CI/CD increased deployment speed by 50%, enabling faster updates and iteration on machine learning models. With better resource utilization and improved monitoring, the company was able to scale its AI workloads while maintaining cost efficiency, putting its machine learning operations on a sustainable footing.

