AI in software development – tools that work

Artificial intelligence is rapidly changing how modern software is built. Just a few years ago, many AI applications in software development were more theoretical than practical. Today, this is no longer the case. At Valletta Software, we’ve been actively integrating AI into our development processes, not as an experiment but as a working tool that saves time, improves quality, and helps our teams and clients achieve better results.

We view AI not as something that will replace developers, but as a tool that complements them: automating routine steps, assisting with complex analysis, and streamlining tasks that used to take hours or even days. From requirements analysis and code generation to testing, refactoring, and writing documentation, AI now plays a role at every stage of the development cycle.

In this article, we’ll share our experience applying AI in real-world projects. You’ll see what actually works, where the limitations are, and how companies can adopt these tools in a way that is both realistic and effective. We’ll also provide examples of concrete results we’ve achieved: time savings, quality improvements, and new capabilities that were difficult to implement with traditional methods alone.

This is Part 1 of our two-part deep dive into how AI is transforming software development. In this first part, we’ll cover how AI helps in requirements analysis, code generation, refactoring, testing, documentation, and other core development processes. In Part 2, we’ll focus on the challenges and limitations of AI in software engineering, as well as break down common myths and the real-world benefits of AI adoption.

Key Areas Where AI Helps in Software Development

Based on our project experience at Valletta Software, AI is already providing value in several key areas of the software development lifecycle. Let’s look at them one by one:

1. Requirements Clarification and Analysis

AI tools can analyze initial client inputs (text documents, presentations, emails) and help structure them into clearer requirements. Using large language models (LLMs), we generate clarifying questions and identify inconsistencies early in the process. This reduces the back-and-forth between business stakeholders and developers and ensures that projects start on a solid foundation.

2. Specification Drafting and Backlog Generation

Once requirements are clear, AI helps us draft technical specifications and even suggest initial user stories and backlog items. This speeds up sprint planning and improves the quality of our documentation. We often combine this with expert review to ensure correctness and feasibility.

3. Code Generation and Boilerplate Automation

While AI can’t replace human creativity or engineering expertise, it is very effective at generating boilerplate code, setting up project scaffolds, and automating repetitive coding tasks. This allows our developers to focus on higher-level architecture and business logic.

4. Refactoring and Code Quality Improvements

AI-powered code review and refactoring tools help us detect potential issues early. We’ve successfully used AI to suggest performance optimizations, improve code readability, and align code with internal style guides.

5. Automated Testing

AI can generate unit tests, integration tests, and even exploratory test scenarios based on requirements and code structure. This significantly accelerates our testing phase and increases test coverage without overwhelming manual testers.

6. Documentation

From API docs to internal developer guides, AI is proving very helpful in generating well-structured, easy-to-understand documentation. This is especially useful when working with fast-changing codebases where documentation can easily lag behind.

7. Automating Routine Tasks

We also apply AI to automate routine tasks that would otherwise distract developers from more meaningful work. This includes generating release notes from commit messages, keeping changelogs and dependencies up to date, scaffolding boilerplate code for new services, and automating test data creation or environment setup scripts. By offloading these repetitive activities to AI tools, we free up developer time for complex problem-solving and architecture work.
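As a rough illustration of the release-notes step, the sketch below groups commit subject lines into sections before any AI tool polishes the wording. It is not the pipeline used in our projects: it assumes commits follow the Conventional Commits prefix style (`feat:`, `fix:`, `docs:`), and the names `SECTION_TITLES`, `group_commits`, and `render_release_notes` are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical mapping from commit-type prefixes to release-note headings.
SECTION_TITLES = {"feat": "Features", "fix": "Bug Fixes", "docs": "Documentation"}

# Matches subjects such as "feat(api): add export endpoint" or "fix: handle empty payload".
COMMIT_PATTERN = re.compile(
    r"^(?P<type>\w+)(?:\((?P<scope>[^)]+)\))?!?:\s*(?P<summary>.+)$"
)


def group_commits(messages):
    """Group commit subject lines by section; unrecognized ones go to 'Other'."""
    sections = defaultdict(list)
    for message in messages:
        subject = message.strip()
        match = COMMIT_PATTERN.match(subject)
        if match and match.group("type") in SECTION_TITLES:
            title = SECTION_TITLES[match.group("type")]
            scope = match.group("scope")
            summary = match.group("summary")
            sections[title].append(f"{scope}: {summary}" if scope else summary)
        else:
            sections["Other"].append(subject)
    return dict(sections)


def render_release_notes(version, messages):
    """Render grouped commits as plain-text release notes."""
    grouped = group_commits(messages)
    lines = [f"Release {version}", ""]
    for title in [*SECTION_TITLES.values(), "Other"]:
        if title in grouped:
            lines.append(f"{title}:")
            lines.extend(f"- {entry}" for entry in grouped[title])
            lines.append("")
    return "\n".join(lines).rstrip()


if __name__ == "__main__":
    print(render_release_notes("1.2.0", [
        "feat(api): add export endpoint",
        "fix: handle empty payload",
        "bump deps",
    ]))
```

A deterministic grouping pass like this keeps the structure of the notes predictable, so a language model only has to rewrite entries for readability rather than decide what belongs where.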

Real-World Use Cases from Our Projects

We’ve already implemented AI-driven techniques in various client projects. Here are some examples of how this works in practice:

Task Estimation and Resource Planning

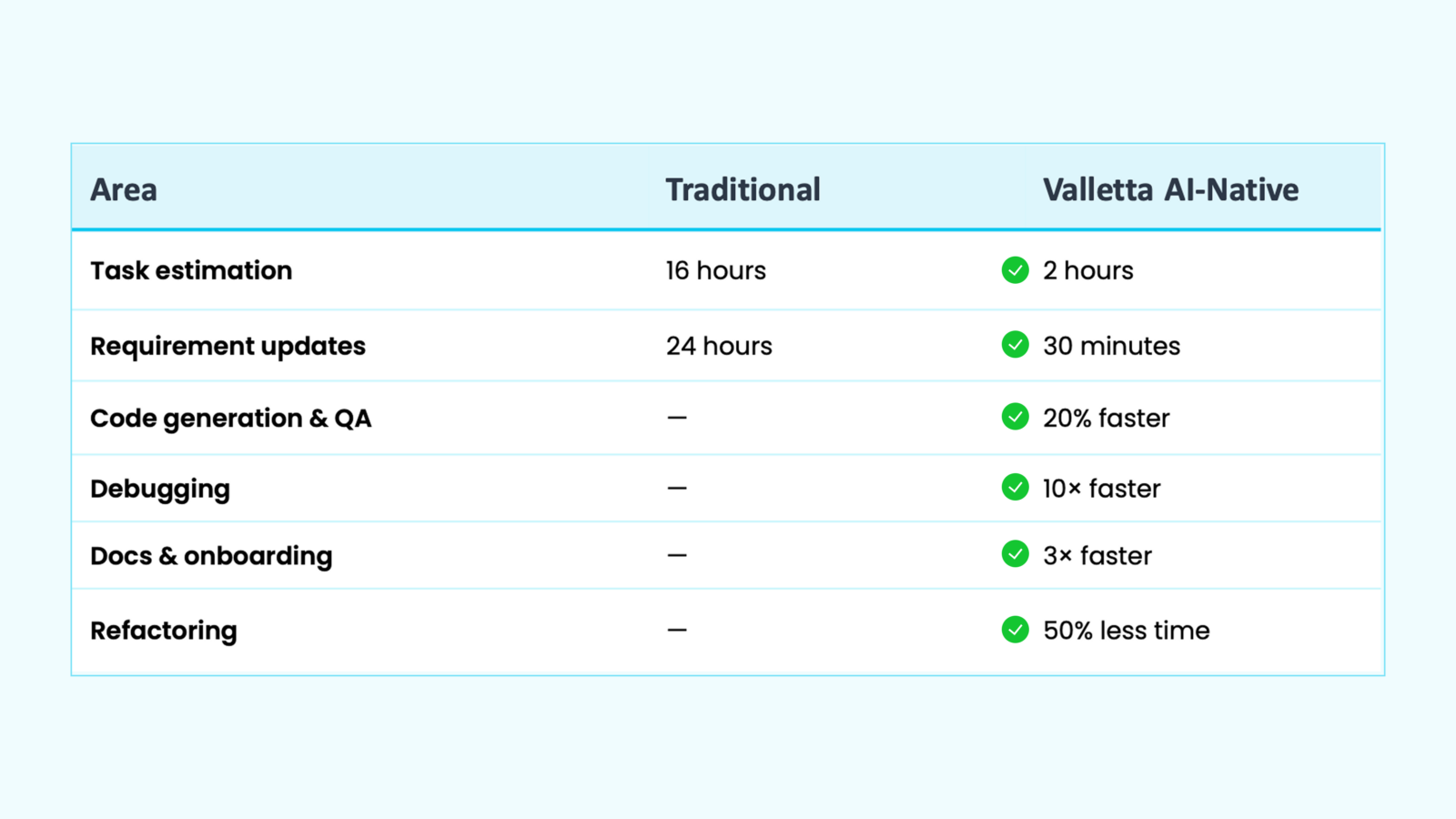

AI dramatically reduces the time needed to create accurate project estimates. Using LLM-based pipelines, we now automate decomposition of project requirements and initial effort estimation, bringing the preparation time down from 10–16 hours to just 1–2 hours of expert review. AI also helps compare different versions of client specs, cutting review time from 12–24 hours to about 30 minutes, which is essential for projects with frequently changing requirements.
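One way to make spec comparison cheaper is to isolate the changed lines first, so that a reviewer or a model only looks at what actually differs. The sketch below does this with Python's standard `difflib`. It is an illustration under simple assumptions (specs are plain text, one requirement per line), not a description of our production pipeline, and `changed_lines` is a hypothetical helper name.

```python
import difflib


def changed_lines(old_spec, new_spec):
    """Return (removed, added) lines between two plain-text spec versions."""
    old_lines = old_spec.splitlines()
    new_lines = new_spec.splitlines()
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines, autojunk=False)
    removed, added = [], []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        # "replace" contributes to both lists; "equal" blocks are skipped.
        if tag in ("replace", "delete"):
            removed.extend(old_lines[i1:i2])
        if tag in ("replace", "insert"):
            added.extend(new_lines[j1:j2])
    return removed, added


if __name__ == "__main__":
    old = "Users can log in with email.\nReports are exported as CSV."
    new = (
        "Users can log in with email or SSO.\n"
        "Reports are exported as CSV.\n"
        "Audit log is retained for 90 days."
    )
    removed, added = changed_lines(old, new)
    print("Removed:", removed)
    print("Added:", added)
```

The two lists can then be handed to a model with a prompt asking which changes affect scope or estimates, which is far less text than two full specification documents.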

AI-Supported Code Generation

We use AI to generate certain types of boilerplate code, including API endpoints, DTOs (Data Transfer Objects), and database schemas. This allows our developers to focus on architecture and business logic instead of repetitive coding. To reduce the risk of hallucinations from LLMs, we combine model outputs with Retrieval-Augmented Generation (RAG) techniques, feeding models relevant project context and checking that their suggestions align with actual system requirements.
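The retrieval step of RAG described above can be sketched in a few lines. This is a deliberately minimal example: it scores project snippets with bag-of-words cosine similarity instead of the embedding models a real setup would typically use, and the function names (`retrieve`, `build_prompt`) and the prompt layout are assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents most similar to the query, best first."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop snippets with no overlap at all; they would only add noise.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(task, documents, top_k=2):
    """Prepend the retrieved project context to the task description."""
    context = "\n".join(f"- {snippet}" for snippet in retrieve(task, documents, top_k))
    return f"Project context:\n{context}\n\nTask: {task}"


if __name__ == "__main__":
    docs = [
        "OrderDto has fields id, customer_id, total_amount.",
        "Deployment uses Docker Compose on staging.",
        "The orders endpoint returns OrderDto as JSON.",
    ]
    print(build_prompt("Generate an endpoint that returns OrderDto for orders", docs))
```

Grounding the prompt in retrieved snippets gives the model the project's real names and contracts to work from, which is the mechanism by which RAG reduces invented identifiers.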

AI for Code Refactoring and Analysis

One of our most successful cases involved using AI to optimize a highly complex SQL stored procedure without having access to sensitive production data. The AI helped refactor the logic and suggest optimizations based on a test dataset. The result was a 10x speedup in the procedure’s execution and a much faster refactoring process compared to manual analysis.

Test Case Generation for Critical Systems

We apply AI to generate automated test cases based on functional specifications and API contracts. Using RAG, we make sure the AI has up-to-date system knowledge before generating test logic. These tests are integrated on both the backend and the frontend, which expands test coverage faster and helps our QA engineers focus on exploratory and regression testing.
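To show what "test cases from an API contract" can mean in its simplest form, the sketch below derives one valid case and one missing-field case per required field. Everything about it is an assumption for illustration: the contract is a hypothetical dictionary format rather than a real OpenAPI document, the expected status codes (200 and 400) are placeholders, and an AI-assisted workflow would produce richer scenarios than this rule-based baseline.

```python
def generate_test_cases(contract):
    """Derive a valid case plus one negative case per required field."""
    fields = contract["fields"]
    valid_payload = {name: spec["example"] for name, spec in fields.items()}
    cases = [
        {"name": "valid_payload", "payload": valid_payload, "expected_status": 200}
    ]
    for name, spec in fields.items():
        if spec.get("required"):
            # Copy the valid payload without the required field under test.
            payload = {k: v for k, v in valid_payload.items() if k != name}
            cases.append(
                {"name": f"missing_{name}", "payload": payload, "expected_status": 400}
            )
    return cases


if __name__ == "__main__":
    # Hypothetical contract for an order-creation endpoint.
    contract = {
        "endpoint": "/orders",
        "fields": {
            "customer_id": {"required": True, "example": 42},
            "total_amount": {"required": True, "example": 19.99},
            "note": {"required": False, "example": "gift wrap"},
        },
    }
    for case in generate_test_cases(contract):
        print(case["name"], case["expected_status"], case["payload"])
```

A baseline like this is also useful as a check on generated tests: if a model's output omits a case that the contract mechanically implies, that gap is easy to detect.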

AI-Assisted Documentation for Developer Handover

AI also supports technical writing by generating first drafts of API documentation, internal architecture guides, and onboarding materials. In this area, we’ve observed a 3x acceleration of documentation workflows, helping us onboard new team members faster and maintain more consistent project knowledge.

AI Tools We Actually Use in Development

In practice, many AI tools look promising on paper but do not hold up in real-world software projects. Over time, we have identified a toolkit that reliably supports our development processes and integrates well with our workflows.

For code generation and completion, we actively use GitHub Copilot, Cursor, and OpenAI Codex. These assistants help during day-to-day programming, speeding up the writing of boilerplate code, suggesting function implementations, and assisting with refactoring. Additionally, our developers benefit from IntelliJ AI Assistant and Claude Desktop when handling complex tasks like structured text generation or formal documentation.

For automated refactoring, Swimm has proven to be a valuable addition. It not only helps streamline refactoring workflows but also ensures consistency across large codebases.

AI-powered testing is another area where we see practical results. We employ Testim and Applitools to automate UI testing and verify visual consistency, which complements our RAG-based backend and API test generation process.

Finally, for predictive analytics and low-code prototyping, we use ML tools and platforms. These tools help us quickly prototype dashboards, internal utilities, and client-facing reports, reducing the time needed to bring data-driven insights to stakeholders.

Of course, the AI tool landscape evolves rapidly, and we continuously test new options. But as of today, this stack covers the majority of our productive use cases and allows us to integrate AI meaningfully into every phase of the development lifecycle.

Comparing LLMs in Practice: GPT, Claude, DeepSeek

One area where we have invested significant time is in comparing the performance of different LLMs across typical software engineering tasks. Specifically, we focused on three popular models: GPT (mainly GPT-4), Claude (versions 3 and 3.5), and DeepSeek.

We have already published a detailed analysis of these comparisons in a separate article. Below is a short summary of our hands-on findings.

Our current findings show that GPT and DeepSeek deliver stronger results for text-based deliverables, such as project documentation. They generate more coherent and contextually accurate outputs, which makes them suitable for creating technical documentation and user guides. Claude Sonnet models, on the other hand, perform better in code generation tasks, especially when working with structured data or formalized lists. In our experience, they exhibit significantly fewer hallucinations and maintain consistency better when modifying codebases.

When it comes to solving one-off algorithmic problems (for example, during prototyping or experimenting with new approaches), DeepSeek models have proven particularly efficient. They generate innovative and performant solutions that can be used as starting points for further optimization by human developers.

In the next part of this series, we’ll take a closer look at the challenges and limitations of using AI in software development, as well as discuss the myths and realities surrounding AI’s true impact on development speed and productivity.